Artificial intelligence technology is rapidly transforming our world, impacting everything from healthcare and finance to entertainment and environmental sustainability. This exploration delves into the core principles of AI, examining its various forms, applications, and societal implications. We’ll journey through the history of AI, exploring its evolution from rudimentary algorithms to the sophisticated systems shaping our present and future. The ethical considerations and potential risks associated with this powerful technology will also be addressed, offering a balanced perspective on its transformative potential.

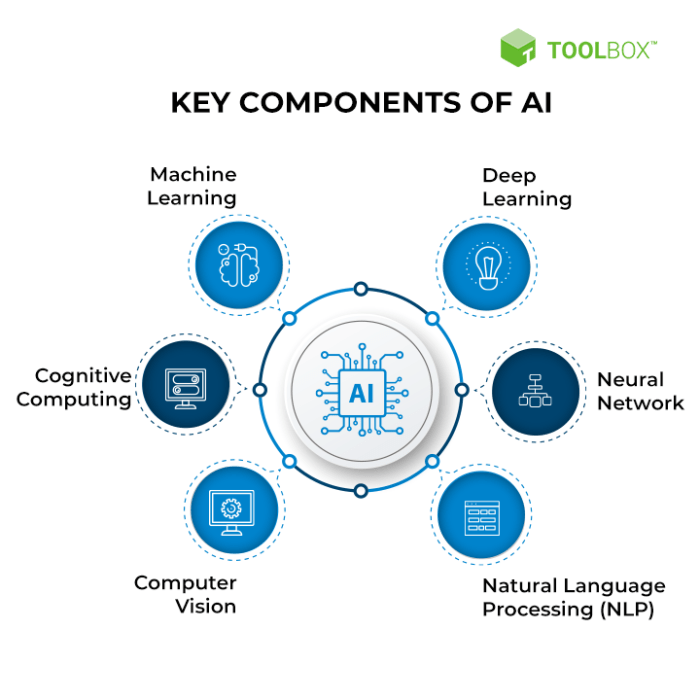

From machine learning and deep learning to natural language processing, we will uncover the diverse techniques driving AI’s capabilities. We’ll examine how AI is revolutionizing data analysis, powering automation across industries, and enhancing cybersecurity measures. Furthermore, we will investigate AI’s role in addressing critical global challenges, such as climate change and healthcare accessibility, and explore its impact on creativity and artistic expression.

Defining Artificial Intelligence Technology

Artificial intelligence (AI) is a broad field encompassing the development of computer systems capable of performing tasks that typically require human intelligence. These tasks include learning, reasoning, problem-solving, perception, and natural language understanding. At its core, AI aims to create machines that can mimic cognitive functions and adapt to new situations without explicit programming for every scenario.

AI’s core principles revolve around the creation of algorithms and models that allow machines to learn from data, make predictions, and improve their performance over time. This learning process often involves statistical analysis, pattern recognition, and optimization techniques. The ultimate goal is to build systems that are not only intelligent but also robust, reliable, and capable of handling complex real-world problems.

Types of Artificial Intelligence

The field of AI is vast and encompasses several distinct approaches. Understanding these different types is crucial to grasping the breadth and depth of AI’s capabilities.

- Machine Learning (ML): Machine learning algorithms enable computers to learn from data without being explicitly programmed. Instead of relying on pre-defined rules, ML algorithms identify patterns and relationships within data to make predictions or decisions. Examples include spam filters, recommendation systems, and fraud detection systems. These systems improve their accuracy over time as they are exposed to more data.

- Deep Learning (DL): Deep learning is a subfield of machine learning that uses artificial neural networks with multiple layers (hence “deep”) to analyze data. These networks are inspired by the structure and function of the human brain and can learn complex patterns from vast amounts of data. Deep learning powers applications like image recognition, natural language processing, and self-driving cars. The multiple layers allow for the extraction of increasingly abstract features from the data, leading to superior performance on complex tasks.

- Natural Language Processing (NLP): Natural language processing focuses on enabling computers to understand, interpret, and generate human language. NLP techniques are used in applications like machine translation, chatbots, sentiment analysis, and text summarization. This field is constantly evolving, with ongoing research focused on improving the accuracy and fluency of computer-generated language and the ability of computers to understand nuanced aspects of human communication.
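To make the contrast with hand-written rules concrete, here is a minimal sketch of a spam filter that learns from labeled examples instead of following fixed rules. It uses multinomial naive Bayes, one classic technique chosen here for brevity rather than what any particular product uses. The function names and the four training messages are hypothetical, invented for illustration.

```python
import math
from collections import Counter

def train_naive_bayes(messages):
    """Learn per-class word counts from (text, label) pairs; labels are 'spam' or 'ham'."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    class_counts = Counter()
    for text, label in messages:
        class_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, class_counts, vocab

def classify(text, model):
    """Pick the label with the highest log-probability for the given text."""
    word_counts, class_counts, vocab = model
    total = sum(class_counts.values())
    scores = {}
    for label in ("spam", "ham"):
        # Log prior plus log likelihood of each word, with add-one (Laplace) smoothing
        score = math.log(class_counts[label] / total)
        n_words = sum(word_counts[label].values())
        for word in text.lower().split():
            score += math.log((word_counts[label][word] + 1) / (n_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

# Hypothetical labeled training data
training = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("meeting moved to monday", "ham"),
    ("lunch with the team on monday", "ham"),
]
model = train_naive_bayes(training)
print(classify("free prize money", model))        # spam
print(classify("team meeting on monday", model))  # ham
```

No rule says "free means spam"; the association comes entirely from the word counts in the examples, so adding more labeled messages changes the filter's behavior without touching the code.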

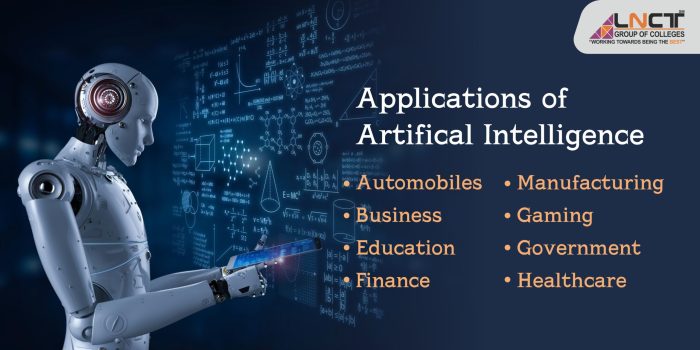

AI Applications Across Industries

AI is rapidly transforming various industries, offering solutions to complex problems and driving innovation.

- Healthcare: AI is used for disease diagnosis, drug discovery, personalized medicine, and robotic surgery. For example, AI algorithms can analyze medical images to detect cancerous tumors, in some studies matching or exceeding the accuracy and speed of human radiologists.

- Finance: AI powers fraud detection systems, algorithmic trading, risk management, and customer service chatbots. AI-driven systems can analyze vast amounts of financial data to identify patterns and predict market trends, aiding in investment decisions.

- Manufacturing: AI is used for predictive maintenance, quality control, and process optimization. AI-powered robots can perform repetitive tasks with greater precision and efficiency than human workers, improving productivity and reducing costs.

- Transportation: Self-driving cars, traffic optimization systems, and autonomous drones are all powered by AI. These technologies promise to revolutionize transportation by improving safety and efficiency and by reducing congestion.

AI’s Impact on Society

The rapid advancement and widespread adoption of artificial intelligence (AI) technologies are profoundly reshaping society, presenting both immense opportunities and significant challenges. Understanding these multifaceted impacts is crucial for navigating the ethical, societal, and economic implications of this transformative technology. This section will explore the ethical considerations, potential benefits, and inherent risks associated with AI’s increasing influence on our lives.

AI’s integration into various aspects of our lives necessitates a careful consideration of its ethical implications. The potential for bias in algorithms, the displacement of human workers, and the erosion of privacy are just a few of the concerns that demand proactive and thoughtful solutions. Balancing the benefits of AI with the need to maintain ethical standards and protect human values is a critical challenge facing society.

Ethical Implications of Widespread AI Adoption

The ethical landscape of AI is complex and multifaceted. Algorithmic bias, stemming from biased training data, can perpetuate and amplify existing societal inequalities. For instance, facial recognition systems have been shown to exhibit higher error rates for individuals with darker skin tones, potentially leading to discriminatory outcomes in law enforcement and other applications. Furthermore, the use of AI in autonomous weapons systems raises serious concerns about accountability and the potential for unintended harm. The lack of transparency in some AI systems also presents challenges, making it difficult to understand how decisions are made and to identify and rectify biases. Addressing these issues requires a multi-pronged approach, including the development of ethical guidelines, robust testing and auditing procedures, and increased transparency in AI systems.
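One concrete form the "robust testing and auditing procedures" above can take is comparing a model's error rate across demographic groups, which is how disparities like the facial recognition example are typically surfaced. The sketch below assumes predictions and true labels are already available per group; the function names, the group labels, and the 1.25 disparity ratio are hypothetical choices for illustration, not an established standard.

```python
from collections import defaultdict

def error_rates_by_group(records):
    """Fraction of wrong predictions per group; records are (group, predicted, actual)."""
    errors = defaultdict(int)
    totals = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}

def flag_disparity(rates, max_ratio=1.25):
    """True if the worst group's error rate exceeds the best group's by more than max_ratio.

    max_ratio is a hypothetical audit threshold chosen for this example.
    """
    best, worst = min(rates.values()), max(rates.values())
    if best == 0:
        return worst > 0
    return worst / best > max_ratio

# Hypothetical audit data: (group, predicted_label, true_label)
records = ([("A", 1, 1)] * 9 + [("A", 0, 1)] +
           [("B", 1, 1)] * 7 + [("B", 0, 1)] * 3)
rates = error_rates_by_group(records)
print(rates)                  # {'A': 0.1, 'B': 0.3}
print(flag_disparity(rates))  # True
```

A real audit would also look at false positives and false negatives separately, since a system can have equal overall error rates while making very different kinds of mistakes for different groups.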

Societal Benefits of AI Technology

Despite the ethical challenges, AI offers significant potential societal benefits. In healthcare, AI is being used to improve diagnostics, personalize treatment plans, and accelerate drug discovery. For example, AI-powered systems can analyze medical images faster than human radiologists and, for some tasks, with comparable or greater accuracy, potentially leading to earlier detection of diseases and improved patient outcomes. In education, AI-powered tutoring systems can provide personalized learning experiences, adapting to individual student needs and improving learning outcomes. Similarly, AI can optimize transportation systems, reducing traffic congestion and improving energy efficiency. The potential applications are vast and continue to expand, promising to improve efficiency, productivity, and quality of life across various sectors.

Potential Challenges and Risks Associated with AI Development

The development and deployment of AI technologies also present significant challenges and risks. One major concern is the potential for job displacement as AI-powered automation replaces human workers in various industries. This requires proactive measures such as retraining and upskilling initiatives to prepare the workforce for the changing job market. Furthermore, the concentration of power in the hands of a few large technology companies that control AI development raises concerns about monopolies and the potential for misuse of AI technology. The development of increasingly sophisticated AI systems also raises concerns about the potential for unintended consequences and the need for robust safety mechanisms to prevent harmful outcomes. The potential for misuse of AI in areas such as surveillance and cyber warfare also necessitates careful consideration and proactive measures to mitigate these risks.

The Evolution of AI Technology

The field of artificial intelligence has undergone a dramatic transformation since its inception, moving from theoretical concepts to sophisticated technologies impacting various aspects of our lives. This evolution is characterized by periods of rapid advancement interspersed with periods of slower progress, often driven by breakthroughs in computing power, algorithm design, and data availability.

The journey of AI can be understood by examining key milestones and comparing the capabilities of early systems with those of modern AI. This comparison highlights the remarkable progress made and provides a glimpse into the potential of future developments.

A Timeline of Significant Milestones in AI History

Tracking the evolution of AI requires acknowledging several pivotal moments. These moments, while not exhaustive, represent significant shifts in the field’s capabilities and direction.


- 1950s-1970s: The Dawn of AI: Early AI research focused on symbolic reasoning and problem-solving using logic-based approaches. Alan Turing’s work on the Turing Test provided a benchmark for machine intelligence. Programs like ELIZA, a natural language processing program, demonstrated early attempts at human-computer interaction.

- 1980s-1990s: Expert Systems and Machine Learning: Expert systems, designed to mimic the decision-making of human experts, gained prominence. The development of machine learning algorithms, particularly backpropagation for neural networks, laid the groundwork for future advancements. However, limitations in computing power and data availability hampered progress.

- 2000s-Present: The Deep Learning Revolution: Advances in computing power, particularly the rise of GPUs, and the explosion of available data fueled the resurgence of deep learning. Deep neural networks achieved breakthroughs in image recognition, natural language processing, and game playing, surpassing human performance in several domains. Examples include AlphaGo’s victory over a world champion Go player and the development of sophisticated language models like GPT-3 and LaMDA.

Comparison of Early AI Systems and Current State-of-the-Art Technologies

Early AI systems, primarily rule-based and reliant on symbolic reasoning, were limited in their ability to handle complex, real-world scenarios. They lacked the adaptability and learning capabilities of modern AI. For example, early chess-playing programs relied on pre-programmed strategies and were easily outmaneuvered by human players with creative strategies. In contrast, current state-of-the-art AI systems leverage machine learning, particularly deep learning, to learn from vast amounts of data and adapt to new situations. This allows them to handle ambiguity, noise, and complexity far beyond the capabilities of their predecessors.


| Feature | Early AI Systems | Current State-of-the-Art |
|---|---|---|
| Learning Ability | Limited or non-existent | Highly adaptive and capable of continuous learning |
| Data Handling | Relied on structured, limited data | Processes massive, unstructured datasets |
| Problem-Solving | Rule-based, limited to specific domains | Capable of solving complex, open-ended problems |
| Adaptability | Inflexible and difficult to adapt | Highly adaptable to changing environments |

A Hypothetical Future Scenario Showcasing Advanced AI Capabilities

Imagine a future where AI systems seamlessly integrate into all aspects of our lives. Personalized medicine, powered by AI, diagnoses and treats diseases with unprecedented accuracy and efficiency. AI-driven transportation systems optimize traffic flow, reducing congestion and improving safety. Smart cities utilize AI to manage resources efficiently, minimizing waste and maximizing sustainability. Furthermore, AI assistants anticipate our needs, proactively managing our schedules and providing personalized recommendations. This scenario is not merely science fiction; many of these capabilities are already being developed and deployed, suggesting a future where AI plays an increasingly central role in shaping our world. For instance, self-driving cars are already undergoing extensive testing, and personalized medicine is making significant strides thanks to advancements in AI-powered diagnostics and drug discovery.

AI Algorithms and Techniques

Artificial intelligence relies heavily on sophisticated algorithms to process information, learn from data, and make predictions. These algorithms, the core of AI systems, employ various techniques to achieve intelligent behavior, ranging from simple rule-based systems to complex deep learning models. Understanding these algorithms and their underlying principles is crucial to grasping the capabilities and limitations of AI.

Machine learning algorithms form a significant subset of AI algorithms. They enable systems to learn from data without explicit programming. This learning process involves identifying patterns, making predictions, and improving performance over time. Different types of machine learning algorithms are suited to different tasks and data types.

Machine Learning Algorithm Functionality

Machine learning algorithms generally operate by analyzing large datasets to identify patterns and relationships. This analysis allows the algorithm to build a model that can predict outcomes for new, unseen data. The specific approach varies depending on the type of algorithm and the nature of the data. For example, a supervised learning algorithm learns from labeled data (data where the correct answer is already known), while an unsupervised learning algorithm identifies patterns in unlabeled data. Reinforcement learning algorithms learn through trial and error, receiving rewards for correct actions and penalties for incorrect ones. The algorithm adjusts its behavior based on these rewards and penalties to maximize its cumulative reward.
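The trial-and-error loop described for reinforcement learning can be seen in its simplest form in a multi-armed bandit, a one-state special case of reinforcement learning. The sketch below uses an epsilon-greedy strategy: most of the time it picks the action with the best average reward so far, and occasionally it explores a random one. The payout probabilities, step count, and epsilon value are hypothetical, and the agent never sees the true probabilities, only the rewards.

```python
import random

def epsilon_greedy_bandit(true_payout_probs, steps=5000, epsilon=0.1, seed=0):
    """Learn which action pays best purely from observed rewards."""
    rng = random.Random(seed)
    n_actions = len(true_payout_probs)
    counts = [0] * n_actions
    estimates = [0.0] * n_actions  # running average reward per action
    total_reward = 0
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore: try a random action
        else:
            action = max(range(n_actions), key=lambda a: estimates[a])  # exploit
        # Environment: reward 1 with the action's (hidden) probability, else 0
        reward = 1 if rng.random() < true_payout_probs[action] else 0
        counts[action] += 1
        # Incremental mean update of this action's estimated reward
        estimates[action] += (reward - estimates[action]) / counts[action]
        total_reward += reward
    return estimates, counts, total_reward

estimates, counts, total = epsilon_greedy_bandit([0.2, 0.5, 0.8])
print("estimated payouts:", [round(e, 2) for e in estimates])
print("times each action was chosen:", counts)
print("cumulative reward:", total)
```

The exploration rate is the design trade-off here: with no exploration the agent can lock onto whichever action happened to pay first, while too much exploration wastes steps on actions it already knows are worse.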

AI Model Training and Deployment

The process of building and deploying an AI model involves several key steps. First, data is collected and preprocessed: the data is cleaned, missing values are handled, and the result is transformed into a format suitable for the chosen algorithm. Next, the model is trained on the prepared data, which means feeding the data to the algorithm so it can learn the underlying patterns. Training adjusts the model’s internal parameters to reduce its error, and it often also involves tuning hyperparameters, such as model size or learning rate, to optimize performance. Model evaluation is a crucial step: the trained model’s performance is assessed on held-out data it did not see during training, using metrics appropriate for the task. Finally, the model is deployed, making it available for use in a real-world application. This deployment might involve integrating the model into a software application, a web service, or an embedded system.
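The steps above can be sketched end to end with a deliberately tiny model. This example assumes a single numeric feature and a straight-line relationship, fills the missing value with the mean (one simple imputation strategy among many), holds out a quarter of the data for evaluation, and treats a plain `predict` function as the "deployed" artifact. All function names and data here are hypothetical.

```python
import random
import statistics

def impute_missing(values):
    """Preprocessing: replace missing entries (None) with the mean of observed values."""
    observed = [v for v in values if v is not None]
    mean = statistics.fmean(observed)
    return [mean if v is None else v for v in values]

def train_test_split(xs, ys, test_fraction=0.25, seed=42):
    """Shuffle indices and hold out a fraction of the data for evaluation."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    cut = int(len(idx) * (1 - test_fraction))
    train, test = idx[:cut], idx[cut:]
    return ([xs[i] for i in train], [ys[i] for i in train],
            [xs[i] for i in test], [ys[i] for i in test])

def fit_linear(xs, ys):
    """Training: ordinary least squares fit of y = slope * x + intercept."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return slope, my - slope * mx

def predict(model, x):
    """Deployment: the function an application would call on new inputs."""
    slope, intercept = model
    return slope * x + intercept

def mean_squared_error(model, xs, ys):
    """Evaluation: average squared error on data the model did not train on."""
    return statistics.fmean((predict(model, x) - y) ** 2 for x, y in zip(xs, ys))

# Hypothetical data following y = 2x + 1, with one missing input value
xs_raw = [1, 2, None, 4, 5, 6, 7, 8]
ys = [3, 5, 7, 9, 11, 13, 15, 17]

xs = impute_missing(xs_raw)
x_train, y_train, x_test, y_test = train_test_split(xs, ys)
model = fit_linear(x_train, y_train)
print("held-out MSE:", mean_squared_error(model, x_test, y_test))
print("prediction for x = 10:", predict(model, 10))
```

Because the imputed value does not match the true input, the fitted line is slightly off, which illustrates why preprocessing choices show up later in the evaluation metrics.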

Comparison of AI Algorithm Types

The choice of algorithm depends heavily on the specific task and the nature of the available data. Different algorithm types have their strengths and weaknesses.

| Algorithm Type | Training Method | Application Examples | Limitations |
|---|---|---|---|
| Supervised Learning | Learning from labeled data (input-output pairs) | Image classification, spam detection, medical diagnosis | Requires large amounts of labeled data, can overfit if the model is too complex |
| Unsupervised Learning | Learning from unlabeled data, identifying patterns and structures | Customer segmentation, anomaly detection, dimensionality reduction | Difficult to evaluate performance, results can be difficult to interpret |
| Reinforcement Learning | Learning through trial and error, maximizing cumulative reward | Robotics, game playing, resource management | Requires careful design of reward function, can be computationally expensive |
| Deep Learning | Using artificial neural networks with multiple layers to extract features from data | Image recognition, natural language processing, speech recognition | Requires significant computational resources, can be difficult to train and interpret |
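As an example of the unsupervised row, customer segmentation is often done with k-means clustering, which groups points without any labels by repeatedly assigning each point to its nearest centroid and moving each centroid to the mean of its points. The sketch below assumes two hypothetical customer features (say, monthly spend and visits, already scaled) and six made-up customers.

```python
import math
import random

def kmeans(points, k, iterations=20, seed=0):
    """Cluster 2-D points into k groups using Lloyd's algorithm."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its assigned points
        for i, cluster in enumerate(clusters):
            if cluster:  # keep the old centroid if a cluster ends up empty
                centroids[i] = tuple(sum(coord) / len(cluster) for coord in zip(*cluster))
    return centroids, clusters

# Hypothetical customers: (scaled monthly spend, scaled visits per month)
customers = [(1, 1), (1.5, 2), (2, 1.5), (8, 8), (8.5, 9), (9, 8.5)]
centroids, clusters = kmeans(customers, k=2)
for centroid, members in zip(centroids, clusters):
    print(centroid, members)
```

The "difficult to evaluate" limitation in the table applies directly: the algorithm always returns k groups, and deciding whether those groups are meaningful segments is left to the analyst.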

AI in Data Analysis

AI is revolutionizing data analysis by enabling the processing and interpretation of massive datasets far beyond human capabilities. Its ability to identify patterns, anomalies, and correlations within complex data allows for more accurate insights and predictions, leading to better decision-making across various fields. This section explores how AI tackles large datasets, examines AI-powered visualization, and illustrates its predictive power through a hypothetical scenario.

AI’s use in analyzing large datasets leverages several techniques. Machine learning algorithms, particularly deep learning models, excel at uncovering hidden relationships in high-dimensional data. These algorithms can handle both structured data (like spreadsheets) and unstructured data (like text and images), allowing for comprehensive analysis. For example, Natural Language Processing (NLP) techniques can analyze vast amounts of text data to extract sentiment, identify key topics, and understand customer opinions. Similarly, computer vision algorithms can process images and videos to identify objects, track movements, and extract relevant features. These tools allow for the extraction of valuable insights that would be impractical to obtain through manual analysis at this scale.
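Identifying key topics in text can start from something as simple as TF-IDF weighting, which scores a word highly when it is frequent in one document but rare across the collection. This is a classic statistical baseline rather than the deep learning approach mentioned above; the sketch assumes whitespace tokenization and three hypothetical customer reviews.

```python
import math
from collections import Counter

def tfidf_keywords(documents, top_n=2):
    """Return the top_n highest TF-IDF words for each document."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each word appear?
    doc_freq = Counter(word for tokens in tokenized for word in set(tokens))
    keywords = []
    for tokens in tokenized:
        counts = Counter(tokens)
        scores = {
            # term frequency * inverse document frequency
            word: (count / len(tokens)) * math.log(n_docs / doc_freq[word])
            for word, count in counts.items()
        }
        # Sort by descending score, breaking ties alphabetically
        ranked = sorted(scores, key=lambda w: (-scores[w], w))
        keywords.append(ranked[:top_n])
    return keywords

reviews = [
    "shipping was slow and shipping cost too much",
    "great battery and great screen",
    "battery died fast and support was slow",
]
print(tfidf_keywords(reviews))
# [['shipping', 'cost'], ['great', 'screen'], ['died', 'fast']]
```

Words like "and" appear in every review, so their inverse document frequency is zero and they never surface as topics, which is the main intuition behind the weighting.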

AI-Powered Data Visualization Techniques

Effective data visualization is crucial for understanding complex datasets. AI enhances this process by automating the creation of insightful visualizations and identifying the most effective ways to represent the data. For instance, AI can automatically generate interactive dashboards that allow users to explore data dynamically. It can also identify key patterns and outliers and highlight them visually, making it easier to spot trends and anomalies. Furthermore, AI can create sophisticated visualizations, such as network graphs illustrating relationships between data points or 3D representations of multi-dimensional data, offering a richer understanding than traditional methods. Consider, for example, a geographical heatmap automatically generated by AI to show the distribution of a disease outbreak, instantly highlighting high-risk areas for targeted intervention.

AI’s Role in Predictive Analytics

Predictive analytics uses historical data to forecast future outcomes. AI significantly enhances this process by developing sophisticated predictive models. For instance, consider a hypothetical scenario involving a major e-commerce company. By analyzing past customer purchase data, website browsing history, and demographic information, an AI system can predict which customers are most likely to churn (cancel their subscription or stop purchasing). Rather than relying on simple averages, the model learns nuanced patterns that indicate churn risk. The company can then proactively offer personalized incentives or targeted marketing campaigns to retain these high-value customers, ultimately increasing customer lifetime value and improving profitability. This example demonstrates how AI’s ability to identify complex patterns and relationships in large datasets translates to tangible business benefits.
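A churn predictor of the kind described in this hypothetical scenario is often built, at least as a first baseline, with logistic regression, which turns a weighted sum of customer features into a probability. The sketch below trains it with batch gradient descent. The two features (days since last purchase divided by 30, and purchases in the last 90 days divided by 10), the six customers, the learning rate, and the epoch count are all hypothetical.

```python
import math

def sigmoid(z):
    return 1 / (1 + math.exp(-z))

def train_logistic(features, labels, lr=0.1, epochs=2000):
    """Fit weights and bias by gradient descent on the average log loss."""
    n_features = len(features[0])
    weights = [0.0] * n_features
    bias = 0.0
    m = len(features)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(features, labels):
            # Gradient of log loss for one example is (prediction - label) * x
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for j in range(n_features):
                grad_w[j] += error * x[j]
            grad_b += error
        weights = [w - lr * g / m for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / m
    return weights, bias

def churn_probability(model, x):
    weights, bias = model
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

# Hypothetical customers: [days since last purchase / 30, purchases in last 90 days / 10]
customers = [[0.1, 0.9], [0.2, 0.8], [0.3, 0.7],   # active
             [2.0, 0.1], [2.5, 0.0], [3.0, 0.2]]   # lapsed
churned = [0, 0, 0, 1, 1, 1]

model = train_logistic(customers, churned)
print(round(churn_probability(model, [2.8, 0.1]), 3))   # long absence, few purchases
print(round(churn_probability(model, [0.15, 0.85]), 3))  # recent, frequent buyer
```

In practice the company would rank customers by this probability and target retention offers at those above a chosen cutoff; the learned weights also show which behaviors push risk up or down.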

AI and Automation

The integration of artificial intelligence (AI) into automation processes is rapidly transforming industries worldwide. This fusion is driving unprecedented efficiency gains, but also presents significant challenges, primarily concerning the potential displacement of human workers. Understanding the multifaceted impact of AI-driven automation is crucial for navigating this technological shift effectively.

AI’s impact on automation spans numerous sectors, from manufacturing and logistics to customer service and healthcare. In manufacturing, robots guided by AI algorithms perform complex tasks with greater precision and speed than human workers, leading to increased production and reduced costs. Similarly, in logistics, AI-powered systems optimize delivery routes, manage warehouse operations, and automate sorting processes, resulting in faster and more efficient delivery networks. The healthcare industry utilizes AI for tasks such as image analysis for disease detection, personalized medicine recommendations, and robotic surgery assistance, improving diagnostic accuracy and patient outcomes. Customer service is increasingly automated through AI-powered chatbots and virtual assistants, providing 24/7 support and handling routine inquiries.

Job Displacement Due to AI-Driven Automation

The increasing adoption of AI-driven automation raises concerns about potential job displacement. While AI creates new jobs in areas like AI development and maintenance, it also automates tasks previously performed by humans, leading to job losses in certain sectors. For instance, the automation of manufacturing processes has already resulted in reduced demand for assembly line workers in some factories. Similarly, the rise of AI-powered chatbots has impacted the employment of customer service representatives in certain companies. The extent of job displacement varies depending on the industry and the specific tasks automated. Some studies predict significant job losses in the coming decades, while others emphasize the potential for AI to create new job opportunities and augment existing roles. However, the transition period can be challenging for workers whose jobs are directly affected by automation. For example, the trucking industry faces potential disruption from the development of self-driving vehicles.

Strategies for Mitigating Negative Effects of AI-Driven Job Displacement

Addressing the potential negative consequences of AI-driven job displacement requires proactive strategies focusing on reskilling and upskilling the workforce, providing social safety nets, and fostering a collaborative approach between governments, businesses, and educational institutions.

One crucial strategy involves investing heavily in education and training programs that equip workers with the skills needed for jobs in the AI-driven economy, including reskilling and upskilling programs focused on areas like data science, AI development, and cybersecurity. Governments can also support workforce transitions through policies such as unemployment benefits and job placement services for individuals affected by automation. These programs could include financial assistance for retraining and support for entrepreneurship initiatives.

Another key aspect is fostering collaboration between businesses, educational institutions, and government agencies to develop effective strategies for managing the transition to an AI-driven economy. This collaboration could involve creating industry-specific training programs, developing apprenticeship programs, and promoting lifelong learning opportunities. Finally, exploring alternative economic models, such as universal basic income (UBI), could provide a safety net for individuals whose jobs are displaced by automation. While UBI is a complex issue with ongoing debate surrounding its feasibility and implementation, it represents one possible response to the social and economic inequalities that widespread AI-driven automation could create.

AI and Cybersecurity

Artificial intelligence is rapidly transforming the cybersecurity landscape, offering both powerful new defenses and introducing novel vulnerabilities. Its dual nature presents a complex challenge, demanding a nuanced understanding of its capabilities and limitations to effectively leverage its benefits while mitigating its risks.

AI enhances cybersecurity measures through various applications, significantly improving the speed and accuracy of threat detection and response. This symbiotic relationship is reshaping how we approach digital security.

AI-Enhanced Cybersecurity Measures

AI algorithms are increasingly employed to analyze vast quantities of data, identifying patterns indicative of malicious activity far more efficiently than traditional methods. Machine learning models can be trained on past incidents to recognize sophisticated attacks, and models that learn what normal behavior looks like can flag deviations in real time, which helps surface previously unseen threats, including some zero-day exploits. For instance, anomaly detection systems using AI can identify unusual network traffic or user behavior, flagging potential breaches before they escalate. Furthermore, AI-powered security information and event management (SIEM) systems can correlate data from multiple sources, providing a comprehensive view of the security posture and enabling faster incident response. This proactive approach reduces the time it takes to contain and remediate security incidents, minimizing potential damage.
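The core idea behind anomaly detection, learning a baseline of normal behavior and flagging large deviations from it, can be sketched with a simple z-score test. Production systems use far richer learned models; this statistical version stands in for them only to show the mechanism. The traffic numbers (requests per minute) and the threshold of 3 standard deviations are hypothetical.

```python
import statistics

def flag_anomalies(baseline, observations, threshold=3.0):
    """Flag observations more than `threshold` standard deviations from the baseline mean."""
    mean = statistics.fmean(baseline)
    stdev = statistics.stdev(baseline)  # sample standard deviation of normal traffic
    flagged = []
    for t, value in enumerate(observations):
        z = (value - mean) / stdev
        if abs(z) > threshold:
            flagged.append((t, value, round(z, 1)))
    return flagged

# Hypothetical requests per minute during a normal period
baseline = [120, 118, 125, 130, 122, 119, 127, 121]
# New observations; index 2 is a sudden spike
observations = [124, 131, 410, 118]

for t, value, z in flag_anomalies(baseline, observations):
    print(f"minute {t}: {value} requests (z = {z})")
```

The threshold embodies the trade-off raised in the ethics discussion below: lowering it catches subtler attacks but also flags more legitimate activity, and an automated response built on those flags would block more real users.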

Vulnerabilities of AI Systems to Cyberattacks

Despite its protective capabilities, AI itself is vulnerable to cyberattacks. Adversarial attacks manipulate a model’s input at the time it is used, tricking it into making incorrect predictions or taking undesirable actions. Deep learning models are well known to be susceptible to such adversarial examples, where seemingly insignificant changes to an input, such as subtly altered pixels in an image presented to a facial recognition system, lead to drastically different outputs. Moreover, AI systems rely on large training datasets, which can be targets for data poisoning attacks, where malicious or mislabeled data is introduced to corrupt the model’s learning process and degrade, for example, the accuracy of a threat detection model. The complexity of AI algorithms can also make them difficult to audit and understand, making it harder to identify and address vulnerabilities.
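Why tiny input changes can flip a prediction is easiest to see on a linear model. The sketch below applies the idea behind the fast gradient sign method (FGSM): nudge every input feature by a small amount epsilon in whichever direction pushes the model's score toward the wrong answer. For a linear score the gradient with respect to the input is just the weight vector, so no deep learning framework is needed. The 100-feature "image", its weights, and epsilon are hypothetical values chosen for illustration.

```python
def score(weights, bias, x):
    """Linear classifier score: positive means class +1, negative means class -1."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def sign(v):
    return (v > 0) - (v < 0)

def fgsm_linear(weights, x, epsilon, target_sign):
    """One fast-gradient-sign step against a linear model.

    The gradient of w . x + b with respect to x is w, so moving each feature
    by epsilon * target_sign * sign(w_i) shifts the score by
    target_sign * epsilon * sum(|w_i|), the largest shift possible when no
    single feature may move by more than epsilon.
    """
    return [xi + epsilon * target_sign * sign(w) for w, xi in zip(weights, x)]

# Hypothetical 100-"pixel" input with values in [0, 1] and alternating weights
weights = [0.1 if i % 2 == 0 else -0.1 for i in range(100)]
bias = 0.0
x = [0.5 + 0.01 * sign(w) for w in weights]

x_adv = fgsm_linear(weights, x, epsilon=0.02, target_sign=-1)
print(round(score(weights, bias, x), 3))      # 0.1
print(round(score(weights, bias, x_adv), 3))  # -0.1
print("largest pixel change:", max(abs(a - b) for a, b in zip(x, x_adv)))
```

No pixel moves by more than 2% of its range, yet the hundred small changes add up and the predicted class flips; the same accumulation across many input dimensions is the usual intuition for why high-dimensional models such as image classifiers are exposed to adversarial examples.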

Ethical Considerations of AI in Cybersecurity

The use of AI in cybersecurity raises several ethical concerns. Bias in training data can lead to discriminatory outcomes, for example, disproportionately targeting certain groups of users. The opacity of some AI algorithms, particularly deep learning models, can make it difficult to understand their decision-making processes, raising concerns about accountability and transparency. Furthermore, the potential for AI-powered surveillance technologies to infringe on privacy rights requires careful consideration and robust regulatory frameworks. The autonomous nature of some AI systems also raises questions about the appropriate level of human oversight and the potential for unintended consequences. For example, an AI system tasked with automatically blocking suspicious activity might mistakenly block legitimate users, leading to service disruptions. Balancing the benefits of AI with the need to protect fundamental rights is a critical ethical challenge.

AI in Healthcare

Artificial intelligence is rapidly transforming the healthcare landscape, offering the potential to improve diagnosis, treatment, accessibility, and affordability. Its applications range from analyzing medical images to predicting patient outcomes, leading to more efficient and effective healthcare delivery. However, the integration of AI in healthcare also presents significant regulatory and ethical challenges that need careful consideration.

AI applications in medical diagnosis and treatment are already making a tangible impact. Machine learning algorithms, for instance, are being used to analyze medical images such as X-rays, CT scans, and MRIs with a high degree of accuracy, in some studies matching or exceeding human radiologists in detecting subtle anomalies indicative of diseases like cancer. AI-powered diagnostic tools can assist clinicians in making faster and more informed decisions, leading to earlier interventions and improved patient outcomes. Furthermore, AI is being utilized in personalized medicine, tailoring treatment plans based on individual patient characteristics and genetic information, leading to more effective therapies and reduced side effects. For example, AI algorithms can analyze a patient’s genomic data to predict their response to specific drugs, enabling physicians to select the most appropriate medication and dosage.

AI’s Role in Improving Healthcare Accessibility and Affordability

The potential of AI to enhance healthcare accessibility and affordability is significant. In areas with limited access to specialists, AI-powered telemedicine platforms can provide remote consultations and diagnoses, bridging geographical barriers and ensuring that patients in underserved communities receive timely medical attention. AI-driven diagnostic tools can also reduce the workload on healthcare professionals, allowing them to focus on more complex cases and improving overall efficiency. This increased efficiency can translate to lower healthcare costs, making quality care more affordable for a wider population. For example, AI-powered chatbots can handle routine inquiries, freeing up nurses and doctors to address more urgent needs. Furthermore, AI can optimize hospital resource allocation, reducing wait times and improving the overall patient experience. The use of AI in drug discovery and development can also lead to faster and more cost-effective creation of new medications.

Regulatory Challenges of AI in Healthcare

The implementation of AI in healthcare is not without its challenges. One major hurdle is the regulatory landscape. Ensuring the safety and efficacy of AI-powered medical devices and software requires rigorous testing and validation processes. Data privacy and security are also critical concerns, as AI algorithms rely on vast amounts of sensitive patient data. Establishing clear guidelines and regulations for data usage, storage, and security is essential to maintain patient trust and comply with privacy laws like HIPAA in the United States or GDPR in Europe. Furthermore, algorithmic bias is a significant concern; AI algorithms trained on biased data can perpetuate and amplify existing health disparities. Regulatory bodies must ensure that AI systems are developed and deployed in a fair and equitable manner, avoiding biases that could negatively impact certain patient populations. The lack of clear liability frameworks for AI-related medical errors is another challenge that needs to be addressed to foster responsible innovation and adoption of AI in healthcare.

AI and the Environment

Artificial intelligence is rapidly emerging as a powerful tool in the fight against environmental degradation. Its ability to process vast amounts of data, identify patterns, and predict future trends makes it uniquely suited to tackling complex ecological challenges, from climate change mitigation to biodiversity conservation. The applications are diverse and constantly expanding, offering a significant potential for improving sustainability and resource management globally.

In environmental protection, AI’s analytical capabilities can help detect and respond to environmental changes earlier and more effectively than traditional monitoring methods. Earlier detection makes proactive solutions possible instead of purely reactive ones, potentially preventing significant environmental damage.

AI-Driven Precision Agriculture

AI-powered systems are transforming agriculture by optimizing resource use and minimizing environmental impact. In precision agriculture, AI algorithms analyze satellite imagery, sensor data, and weather patterns so that farmers can apply water, fertilizers, and pesticides only where and when they are needed. This targeted approach reduces waste, lowers pollution from runoff, and can improve crop yields, increasing food production while reducing environmental strain. For example, AI-powered drones can monitor crop health and flag areas that need attention, so that resources are applied only where they help and the environmental footprint of farming shrinks. AI can also optimize irrigation schedules, ensuring water is used efficiently and preventing waste.
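To make targeted irrigation concrete, here is a minimal sketch that uses a simple water-balance rule rather than a trained model: it projects each field zone’s soil moisture from forecast rainfall and evapotranspiration, and schedules water only for zones expected to drop below a threshold. The `Zone` fields, the `irrigation_plan` function, the threshold and target values, and the `MM_PER_PERCENT` conversion factor are all hypothetical; in an AI-driven system, the forecasts and thresholds would come from models trained on sensor and weather data.

```python
from dataclasses import dataclass

# Hypothetical conversion: mm of water needed to raise soil moisture by 1 percentage point.
MM_PER_PERCENT = 2.0


@dataclass
class Zone:
    name: str
    soil_moisture: float          # current volumetric water content, in percent
    forecast_rain_mm: float       # predicted rainfall over the next day
    evapotranspiration_mm: float  # predicted water loss over the next day


def irrigation_plan(zones, threshold=25.0, target=30.0):
    """Return mm of water to apply per zone; zero where no irrigation is needed."""
    plan = {}
    for z in zones:
        # Project tomorrow's moisture from the expected water gain and loss.
        projected = z.soil_moisture + (z.forecast_rain_mm - z.evapotranspiration_mm) / MM_PER_PERCENT
        if projected < threshold:
            # Water only enough to bring the zone back up to the target level.
            plan[z.name] = round((target - projected) * MM_PER_PERCENT, 1)
        else:
            plan[z.name] = 0.0
    return plan


zones = [
    Zone("north", 24.0, 0.0, 5.0),   # dry, no rain expected
    Zone("south", 24.0, 12.0, 5.0),  # dry, but rain will cover the loss
    Zone("east", 32.0, 0.0, 4.0),    # moist enough already
]
print(irrigation_plan(zones))  # → {'north': 17.0, 'south': 0.0, 'east': 0.0}
```

Only the north zone is watered: the south zone starts equally dry, but its forecast rain keeps it above the threshold, which is exactly the kind of waste that blanket irrigation schedules cannot avoid.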

AI in Climate Change Mitigation

AI plays a growing role in climate change modeling and prediction. Machine learning algorithms analyze vast datasets of historical climate observations, greenhouse gas emissions, and other environmental factors, complementing physics-based climate models and helping make them more accurate and faster to run. These models are used to project future climate scenarios, enabling policymakers and researchers to develop effective mitigation strategies. AI can also help optimize the placement of renewable energy installations such as wind turbines and solar panels, maximizing energy generation while minimizing environmental disruption, and it can analyze energy consumption patterns to identify opportunities for improving efficiency.

A Hypothetical Scenario: AI-Powered Forest Fire Prevention

Imagine an AI system that continuously monitors weather patterns, satellite imagery, and historical fire data and identifies an area at high risk of forest fire. The system detects subtle changes in vegetation dryness, wind speed, and temperature across a larger area and at a finer resolution than human monitoring alone could manage. Based on this analysis, the AI alerts the relevant authorities early, allowing preventive measures such as controlled burns or strategic deployment of firefighting resources. This proactive approach could significantly reduce the scale and impact of wildfires, protecting both ecosystems and human settlements. The scenario illustrates how AI could act as an early warning system, limiting the devastating effects of natural disasters exacerbated by climate change.
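The scoring step in this hypothetical scenario can be sketched as a logistic risk model that combines the monitored variables into a single score and raises an alert above a threshold. The weights, bias, threshold, area names, and the 0-to-1 `vegetation_dryness` index below are invented for illustration; a real system would learn such parameters from historical fire data and would likely use many more inputs.

```python
import math

# Hypothetical parameters; a real system would fit these to historical fire records.
WEIGHTS = {"vegetation_dryness": 4.0, "wind_speed_kmh": 0.05, "temperature_c": 0.08}
BIAS = -7.0


def fire_risk(reading):
    """Combine readings linearly, then squash with the logistic function into (0, 1)."""
    z = BIAS + sum(WEIGHTS[k] * reading[k] for k in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def alerts(readings, threshold=0.7):
    """Return the areas whose risk score meets or exceeds the alert threshold."""
    return [area for area, r in readings.items() if fire_risk(r) >= threshold]


readings = {
    "ridge": {"vegetation_dryness": 0.9, "wind_speed_kmh": 40, "temperature_c": 35},
    "valley": {"vegetation_dryness": 0.3, "wind_speed_kmh": 10, "temperature_c": 22},
}
print(alerts(readings))  # → ['ridge']
```

The logistic form keeps the score bounded, so a single threshold can be tuned to trade missed fires against false alarms.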

The Future of Artificial Intelligence

Artificial intelligence is rapidly evolving, promising transformative changes across various sectors. Predicting the precise future of AI is inherently challenging, but analyzing current trends and breakthroughs allows us to envision potential developments and their implications. This exploration focuses on likely advancements, emerging challenges, and the long-term societal effects of increasingly sophisticated AI systems.

The next decade will likely witness significant progress in several key areas. Researchers are actively pursuing more robust and explainable AI models, aiming to move beyond the “black box” nature of many current systems. This increased transparency will be crucial for building trust and ensuring responsible AI deployment.

Potential Breakthroughs in AI Research and Development

Several promising avenues of AI research hold the potential for significant breakthroughs. One is the development of more general-purpose AI, moving beyond narrow AI designed for specific tasks toward systems capable of adapting to a wider range of challenges. This could involve advances in transfer learning, where knowledge gained in one task is applied to another, and more sophisticated reinforcement learning algorithms that enable AI to learn through trial and error in complex environments. Another promising area is neuromorphic computing, which models hardware on the structure and function of the human brain and could lead to significantly more energy-efficient and powerful AI systems. Together, such advances could let AI systems learn and adapt far faster and at larger scales than today’s systems, with applications ranging from drug discovery to climate modeling.
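Learning through trial and error can be illustrated with tabular Q-learning, one of the simplest reinforcement learning algorithms, on a toy problem far simpler than the complex environments mentioned above. In this sketch an agent in a hypothetical five-cell corridor learns, purely from rewards, that moving right reaches the goal. The environment, the reward, and the hyperparameters (`alpha`, `gamma`, `epsilon`) are illustrative assumptions, not values from any particular system.

```python
import random

N_STATES = 5        # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)  # move left or move right


def step(state, action):
    """Move within the corridor; reward 1.0 only when the goal is reached."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            # Epsilon-greedy: explore at random sometimes, otherwise exploit
            # the best-known action (breaking ties at random).
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            nxt, reward, done = step(state, action)
            # Q-learning update: nudge the estimate toward reward + discounted best next value.
            target = reward if done else reward + gamma * max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = nxt
            if done:
                break
    return q


def greedy_policy(q):
    """Best learned action in each non-goal cell."""
    return [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]


print(greedy_policy(train()))  # → [1, 1, 1, 1]
```

No rule ever tells the agent to move right; the preference emerges because reward from the goal propagates backward through the value estimates over repeated attempts.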

Emerging Trends and Challenges in the Field of AI

The rapid advancement of AI also presents significant challenges. One major concern is the ethical implications of increasingly autonomous systems. Ensuring fairness, accountability, and transparency in AI decision-making is crucial to prevent bias and discrimination. Furthermore, the potential for job displacement due to automation requires proactive strategies to reskill and upskill the workforce. Data privacy and security are also paramount concerns, as the reliance on vast datasets for training AI models raises questions about data ownership, protection, and potential misuse. The increasing sophistication of AI also necessitates careful consideration of its potential use in autonomous weapons systems and the need for robust international regulations to prevent unintended consequences.

Long-Term Societal Impact of Advanced AI

The long-term societal impact of advanced AI will be profound and multifaceted. While AI has the potential to significantly improve healthcare, education, and other essential services, it also poses risks. The potential for economic disruption due to automation requires careful planning and mitigation strategies to ensure a just transition. Furthermore, the increasing reliance on AI for decision-making raises concerns about the erosion of human agency and the potential for algorithmic bias to exacerbate existing societal inequalities. Addressing these challenges requires a multidisciplinary approach involving researchers, policymakers, and the public to ensure that AI benefits all of society and minimizes potential harms. This includes fostering open dialogue and collaboration to establish ethical guidelines and regulations that promote responsible AI development and deployment. The future of AI is not predetermined; it will be shaped by the choices we make today.

AI and Creativity

The intersection of artificial intelligence and creativity is a rapidly evolving field, challenging our understanding of what constitutes art, music, and literature, and who – or what – can be considered an artist. AI systems are no longer just tools assisting human creators; they are actively participating in the creative process, generating original works that raise fascinating questions about authorship, originality, and the very nature of creativity itself.

AI’s ability to learn patterns and generate novel outputs from them allows it to imitate human creative styles and, in some narrow domains, produce work that rivals human output. This capability has led to impressive AI-generated works that blur the lines between human and machine artistry.

Examples of AI-Generated Art, Music, and Literature

AI is increasingly used to produce various forms of creative output. In visual art, Generative Adversarial Networks (GANs) have been widely used to create striking images. A GAN consists of two neural networks, a generator and a discriminator, trained against each other: the generator produces candidate images, and the discriminator tries to tell them apart from real ones, which pushes the generator toward increasingly realistic results. Other systems rely on different techniques; DALL-E 2, for example, generates photorealistic images from text prompts using a diffusion-based model rather than a GAN. AI is also composing music across genres, from classical to pop, using algorithms that learn musical structures and patterns. In literature, AI systems are writing poems, short stories, and even scripts, learning stylistic nuances and narrative structures from vast datasets of existing texts. The results range from simple text-based games to more sophisticated narratives that are sometimes difficult to distinguish from human-written works.
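The competition between generator and discriminator can be expressed through two loss functions from the standard GAN formulation. This minimal sketch computes them from discriminator outputs (the probability that a sample is real) and deliberately omits the networks and the training loop; the function names and example probabilities are assumptions for illustration. The discriminator’s loss falls when it scores real samples near 1 and generated samples near 0, while the generator’s (non-saturating) loss falls when its samples are scored as real.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: D should output ~1 on real samples and ~0 on generated ones."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: G is rewarded when D scores its samples as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# D confidently spots the fakes: low loss for D, high loss for G.
print(discriminator_loss([0.9, 0.95], [0.05, 0.1]) < discriminator_loss([0.9, 0.95], [0.9, 0.8]))  # True
print(generator_loss([0.9, 0.8]) < generator_loss([0.05, 0.1]))  # True
```

Because each network’s loss improves exactly when the other’s worsens, training alternates between updating the two, and that tension is what drives the generated images toward realism.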

Implications of AI’s Role in Creative Fields

The integration of AI into creative fields has significant implications. It presents new opportunities for artists and creators, providing them with powerful tools to enhance their workflows and explore new creative avenues. AI can assist with tasks like generating initial ideas, automating repetitive processes, and exploring variations on a theme. This can lead to increased productivity and allow artists to focus on higher-level creative decisions. However, it also poses challenges, including concerns about job displacement for human artists and the potential devaluation of human creativity. The question of copyright and ownership of AI-generated works also requires careful consideration.

Ethical Considerations of AI-Generated Creative Works

The rise of AI-generated art raises several ethical concerns. One central issue is the question of authorship and ownership. If an AI creates a piece of art, who owns the copyright? Is it the programmer who created the AI, the user who provided the input, or the AI itself? Another concern is the potential for AI to perpetuate biases present in the data it is trained on. If an AI is trained on a dataset that predominantly features a certain style or perspective, it may produce works that reflect and reinforce those biases. Furthermore, the ease with which AI can generate creative content raises concerns about authenticity and originality. The potential for misuse, such as generating deepfakes or creating propaganda, also necessitates careful consideration and responsible development practices.

“The question of authorship is complex. Is the AI merely a tool, like a paintbrush, or is it a collaborator, a co-creator, or even the primary artist?”

Final Thoughts

Artificial intelligence technology stands as a powerful tool with the potential to reshape society profoundly. While its transformative capabilities offer immense benefits across various sectors, responsible development and ethical considerations remain paramount. Understanding the intricacies of AI, its potential risks, and its societal impact is crucial for navigating its evolving landscape and harnessing its potential for positive change. The future of AI is not predetermined; it is a future we collectively shape through informed decisions and responsible innovation.